For many years, researchers have been looking for ways to preserve data privacy without hindering the use of machine learning (ML) methods for data analysis and prediction. As a result, several privacy-preserving techniques, such as Secure Multi-Party Computation (SMPC), Differential Privacy (DP), and Homomorphic Encryption (HE), have been developed to balance data utility and privacy. Overall, these techniques have produced promising results. However, their adoption and applicability typically depend on the use case, as each one trades off performance against privacy.

What's Homomorphic Encryption?

In the simplest terms, HE is a form of encryption that addresses the problem of preserving data privacy in ML-based analysis. How does it achieve this? With HE, data can be processed while it remains encrypted. A third party can therefore compute on the information in its encrypted form without being able to read it. Because the mathematical structure underlying the data is preserved under encryption, the full utility of the data is retained: decrypting the result of a computation on ciphertexts gives the same result as performing that computation on the plaintext.
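To make the idea concrete, here is a minimal Python sketch of an additively homomorphic scheme. It uses textbook Paillier encryption, a well-known scheme chosen purely for illustration (it is not ASCAPE's design), with tiny hard-coded primes that offer no real security. The function names `keygen`, `encrypt`, `decrypt`, and `add_encrypted` are our own. Multiplying two ciphertexts produces a ciphertext of the sum of the plaintexts, so whoever performs that multiplication never sees 20, 22, or 42.

```python
import math
import secrets


def keygen(p, q):
    """Toy Paillier key generation from two distinct primes (far too small to be secure)."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p - 1, q - 1)
    # With generator g = n + 1, L(g^lam mod n^2) = lam mod n, so mu is lam's inverse mod n.
    mu = pow(lam, -1, n)
    return n, (lam, mu)  # public key n, secret key (lam, mu)


def encrypt(n, m):
    """c = (n + 1)^m * r^n mod n^2 with a fresh random r, so encryption is non-deterministic."""
    n2 = n * n
    while True:
        r = secrets.randbelow(n - 1) + 1  # r in [1, n - 1]
        if math.gcd(r, n) == 1:
            break
    return (pow(n + 1, m, n2) * pow(r, n, n2)) % n2


def decrypt(n, sk, c):
    """m = L(c^lam mod n^2) * mu mod n, where L(x) = (x - 1) // n."""
    lam, mu = sk
    x = pow(c, lam, n * n)
    return ((x - 1) // n) * mu % n


def add_encrypted(n, c1, c2):
    """Multiplying ciphertexts adds the underlying plaintexts (mod n), without the secret key."""
    return (c1 * c2) % (n * n)


n, sk = keygen(293, 433)
c1, c2 = encrypt(n, 20), encrypt(n, 22)
print(decrypt(n, sk, add_encrypted(n, c1, c2)))  # 42
```

Because each encryption draws a fresh random `r`, encrypting the same number twice yields different ciphertexts. Paillier only supports additions (and multiplication by plaintext constants), which hints at why running full ML models on encrypted data is difficult.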

A fly in the ointment

Early HE schemes carried such a high computational overhead that using them in ML applications was impractical. Recent developments have since made a number of privacy-preserving ML solutions possible. Despite this progress, most schemes still pair strong security with significant computational overhead, and only a limited number of operations can be performed on a ciphertext before it can no longer be decrypted correctly. As a result, real-world use remains limited. Two major challenges arise when applying deep learning to encrypted data: computations are orders of magnitude slower than their plaintext counterparts, and noise accumulates with each operation. Furthermore, these schemes do not operate directly on floating-point numbers.
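The noise problem can be illustrated with a toy scheme in the style of Learning With Errors (LWE), the hardness assumption behind many practical HE schemes. This is a simplified sketch, not a production scheme: the parameters (`Q`, `T`, `DELTA`, `DIM`, `NOISE`) and all function names are hypothetical and far too small for security. Each ciphertext hides the message under a small random error; adding ciphertexts also adds their errors, and decryption only succeeds while the accumulated error stays below `DELTA / 2`.

```python
import random

# Toy parameters (illustrative only, NOT secure).
Q = 2**16       # ciphertext modulus
T = 16          # plaintext modulus: messages live in {0, ..., 15}
DELTA = Q // T  # scaling factor (4096); decryption tolerates |noise| < DELTA / 2
DIM = 8         # secret-key length
NOISE = 100     # fresh noise is drawn uniformly from [-NOISE, NOISE]


def keygen(rng):
    return [rng.randrange(Q) for _ in range(DIM)]


def encrypt(s, m, rng):
    """Ciphertext (a, b) with b = <a, s> + DELTA * m + e (mod Q)."""
    a = [rng.randrange(Q) for _ in range(DIM)]
    e = rng.randint(-NOISE, NOISE)
    b = (sum(ai * si for ai, si in zip(a, s)) + DELTA * m + e) % Q
    return a, b


def add(c1, c2):
    """Homomorphic addition: messages add (mod T) and so do the errors."""
    (a1, b1), (a2, b2) = c1, c2
    return [(x + y) % Q for x, y in zip(a1, a2)], (b1 + b2) % Q


def phase(s, c):
    a, b = c
    return (b - sum(ai * si for ai, si in zip(a, s))) % Q  # = DELTA * m + e (mod Q)


def decrypt(s, c):
    return round(phase(s, c) / DELTA) % T


def noise(s, c, m):
    """Signed error left in c, given its true plaintext m (for illustration only)."""
    e = (phase(s, c) - DELTA * m) % Q
    return e - Q if e >= Q // 2 else e


rng = random.Random(0)
s = keygen(rng)
m = 1
c = encrypt(s, m, rng)
for step in range(1, 9):
    c, m = add(c, c), (2 * m) % T  # homomorphic doubling: plaintext and noise both double
    print(f"step {step}: noise={noise(s, c, m):6d} budget={DELTA // 2} ok={decrypt(s, c) == m}")
```

Repeated doubling makes the noise grow geometrically, so within a few steps it typically exceeds the budget and `decrypt` starts returning wrong values. Real schemes face the same limit, and homomorphic multiplications make noise grow much faster than additions.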

ASCAPE's approach for secure Deep Learning over medical data

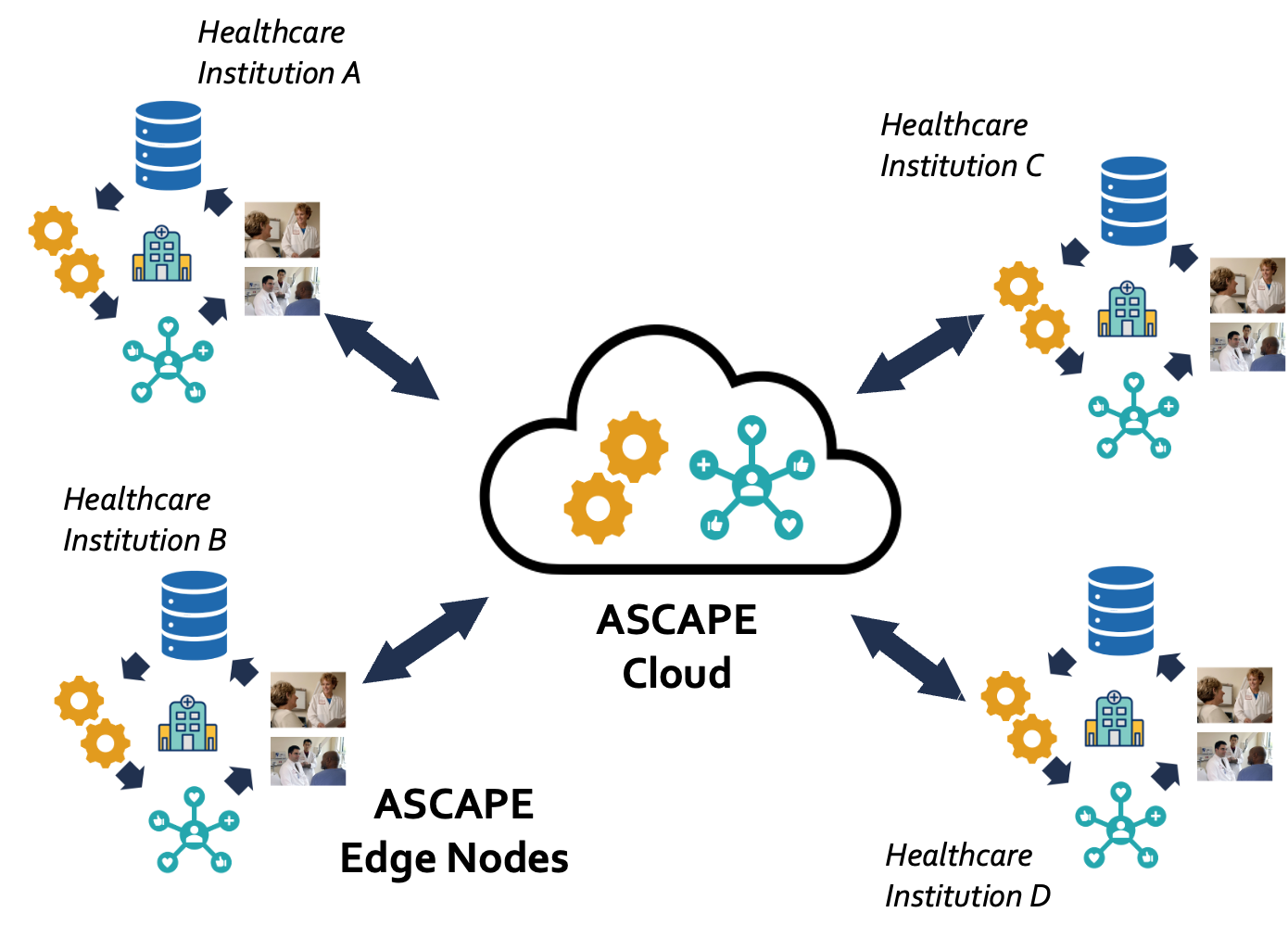

ASCAPE uses HE as a privacy measure to enable both training and prediction of machine learning models on encrypted patient data. We follow an approach in which models are trained on distributed, independent servers that only receive encrypted data and return homomorphically encrypted outputs. Encryption and decryption use a secret key that is known to the collaborating ASCAPE Edge Nodes at hospitals but not to the cloud. Therefore, encryption and decryption can only be performed on Edge Nodes that share this secret key. Meanwhile, the ASCAPE cloud can be used for both model training and inference without ever accessing unencrypted data.
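The key arrangement described above can be sketched as follows. This is a hypothetical illustration, not ASCAPE's actual scheme or API: `EdgeNode`, `cloud_add`, the fixed-point `SCALE`, and the use of HMAC-derived additive masks are all our own assumptions, chosen because they give a simple, noise-free, additively homomorphic symmetric scheme. Edge Nodes share one secret key, while the cloud only adds ciphertexts and never holds key material. Real values are mapped to integers with fixed-point scaling, a common workaround when the underlying arithmetic is integer-based.

```python
import hashlib
import hmac
import secrets

MODULUS = 2**64  # all ciphertext arithmetic is done modulo 2^64
SCALE = 10**6    # hypothetical fixed-point scale: 6 decimal digits


def encode(x):
    """Map a real number into Z_MODULUS via fixed-point scaling (negatives wrap around)."""
    return round(x * SCALE) % MODULUS


def decode(v):
    if v >= MODULUS // 2:  # interpret the upper half of the ring as negative numbers
        v -= MODULUS
    return v / SCALE


def _mask(key, nonce):
    """64-bit pseudo-random mask derived from the shared secret key and a per-message nonce."""
    digest = hmac.new(key, nonce, hashlib.sha256).digest()
    return int.from_bytes(digest[:8], "big")


class EdgeNode:
    """Hypothetical hospital node holding the secret key shared by all Edge Nodes."""

    def __init__(self, shared_key):
        self.key = shared_key

    def encrypt(self, x):
        nonce = secrets.token_bytes(16)  # fresh nonce: same value, different ciphertexts
        c = (encode(x) + _mask(self.key, nonce)) % MODULUS
        return c, [nonce]

    def decrypt(self, ciphertext):
        c, nonces = ciphertext
        total_mask = sum(_mask(self.key, n) for n in nonces) % MODULUS
        return decode((c - total_mask) % MODULUS)


def cloud_add(ct1, ct2):
    """Runs in the cloud: combines ciphertexts without any key material."""
    (c1, n1), (c2, n2) = ct1, ct2
    return (c1 + c2) % MODULUS, n1 + n2


key = secrets.token_bytes(32)  # shared among Edge Nodes only, never sent to the cloud
hospital_a, hospital_b = EdgeNode(key), EdgeNode(key)
ct = cloud_add(hospital_a.encrypt(36.6), hospital_b.encrypt(-1.25))
print(hospital_b.decrypt(ct))  # 35.35
```

This sketch only supports addition and requires the decrypting node to know which nonces were combined; it is meant to show who holds the key and where computation happens, not how model training works.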

The ASCAPE HE design brings several improvements. It is noise-free, non-deterministic (encrypting the same value twice yields different ciphertexts), and adapted to support floating-point arithmetic directly. It also allows a wider range of operations to be performed on encrypted data, including non-linear functions. Most importantly, by using HE for secure Deep Learning, ASCAPE ensures data privacy and security in accordance with the GDPR and other national regulations.

Eager to learn more about Homomorphic Encryption? We have you covered! Read our technical report or a scientific paper derived from ASCAPE research!